Aesop: Digital Storytelling

Block-based programming tool that allows storytellers to quickly author immersive digital experiences.

Tags

UI/UX Design

Development

UX Research

Timeline

Jun - Aug 2019

Tools & Stack

JavaScript

Firebase

Raspberry Pi

Python

HTML, CSS

Roles

UX Designer

UX Researcher

Full-Stack Developer

Overview

Modern forms of storytelling, from theatre to Disney shows, leverage technology and multimedia such as audio, animations, animatronics, lighting, and more to create an engaging and immersive experience. Translating such experiences into everyday storytelling for parents and teachers is, however, an arduous task requiring multiple technical skills.

Problem

How do we build a tool that allows people to incorporate complex multimedia into everyday storytelling?

Design

Design Considerations

The goal was to let users bring different digital modalities into their own storytelling. Taking inspiration from modern shows, theatre, theme parks, and 4D cinema, we listed the output capabilities our tool would offer, giving storytellers room to experiment creatively.

Next, we considered how users might want to trigger these outputs while reading the story. After reviewing some typical storytelling sessions, we realised that storytellers often use forms of expression besides speech, such as facial expressions and gestures. We saw an opportunity to use these expressions as cues that could each trigger multiple actions.

Mapping out different inputs and outputs

For example, a storyteller could say "It was a sunny day", which would change the room's lighting to bright yellow and play the sound of birds chirping in the background. Or a sad facial expression from the storyteller could cue a rain animation and turn on a fan to simulate wind.

After establishing the goals, we tried to understand how one would program these cues and outputs. We started by assuming a user persona: someone with no prior programming experience who wants to narrate a story supported by the multimedia modalities mentioned above.

We saw that this process could be clearly broken down into three stages:

Three stages for the user journey

The user journey starts with the central element of the tool: the story, which the user either writes or selects. Next, the user programs the actions relevant to that story. Finally, the user simply reads the story as intended and watches the programmed actions come to life.

Development

The Implementation

The development process was highly iterative. It started with building modules from the base wireframes, then evolved constantly as we added small features that became necessary once the tool took shape as a real, usable interface.

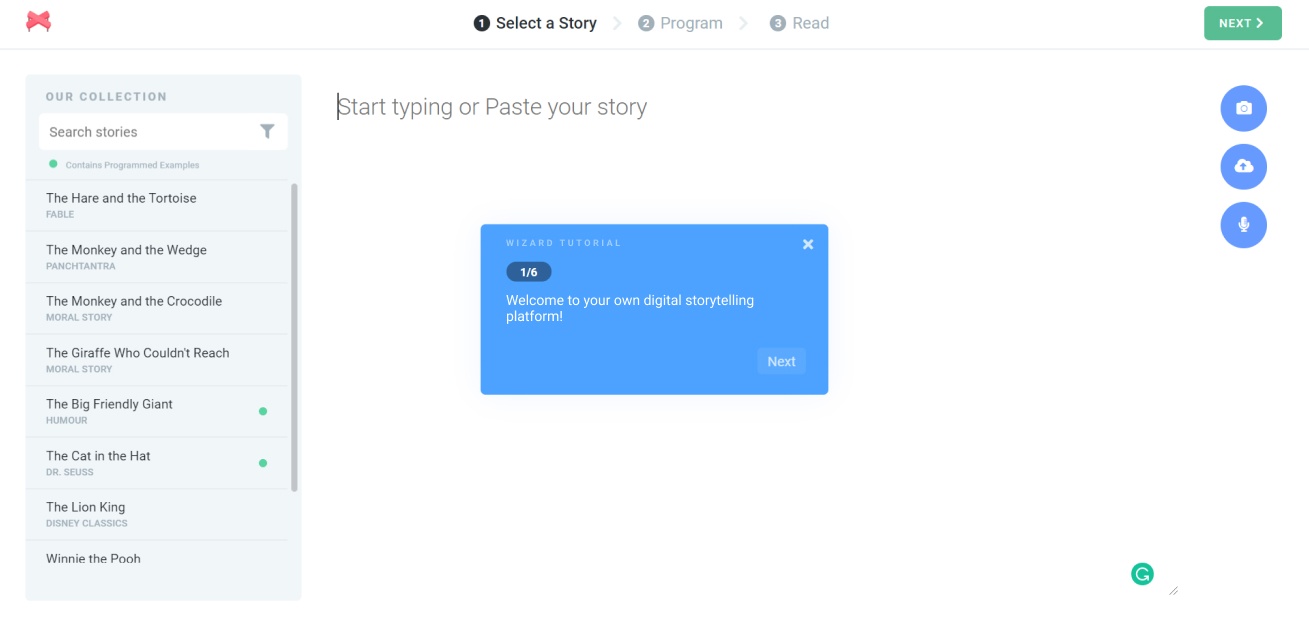

1. Selecting the story

Story selection/writing screen

We identified multiple ways in which a user might wish to enter a story. One could simply type a story or paste one from the internet. One might also want to take a picture of a page in a storybook, upload a text file, or even narrate the story aloud. We implemented all of these input options using popular speech recognition, OCR, and text extraction APIs. The screen is essentially a text editor, which keeps all the emphasis on the task at hand. In addition, we gave Aesop its own database of stories for users to select from, saving them the trouble of browsing external sources to get started.

To improve the usability of a system as new as Aesop, we provided a wizard tutorial as a quick walkthrough guide. Later, the same wizard modal is used during the programming step to succinctly describe the functionality Aesop provides.

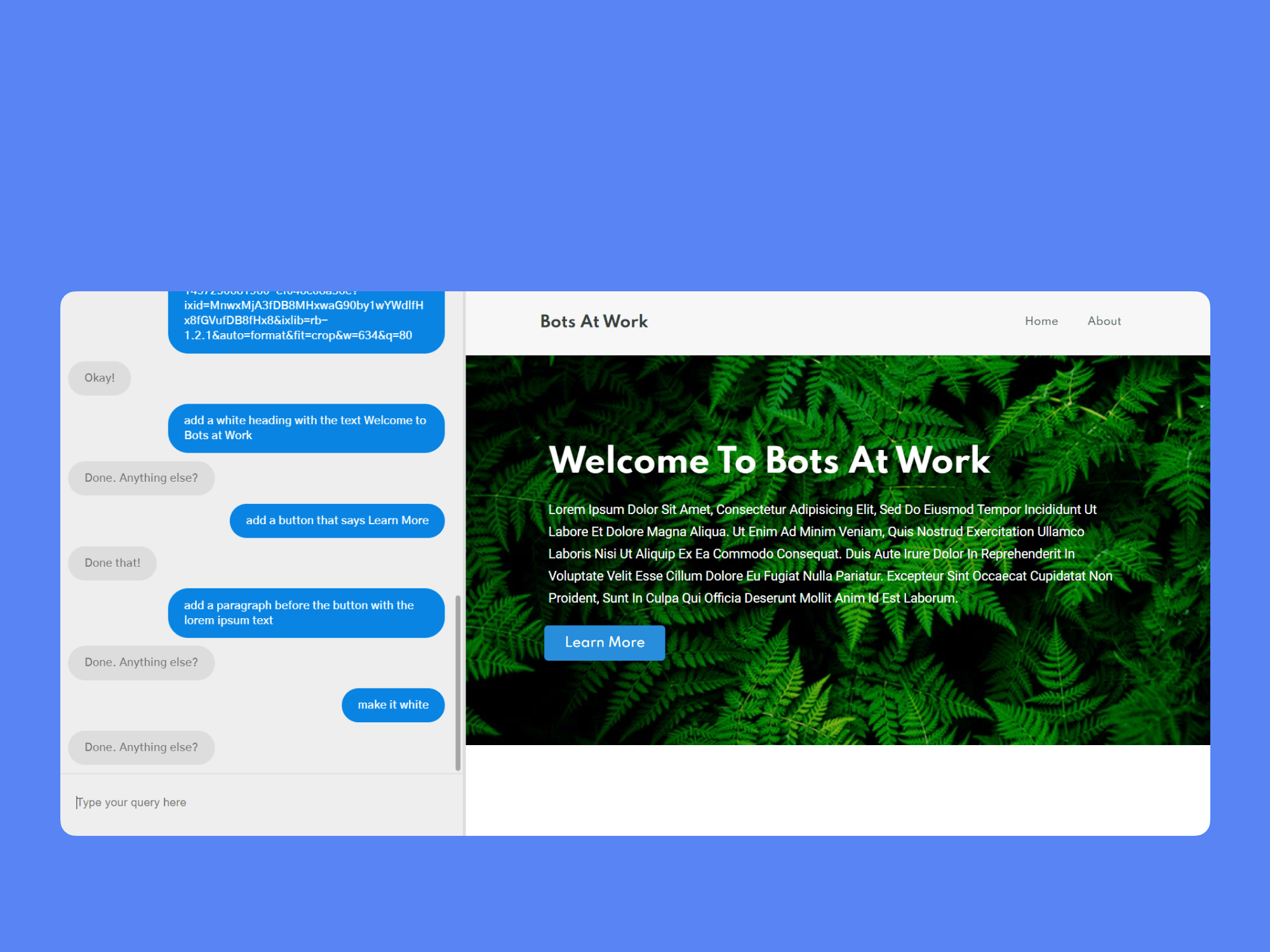

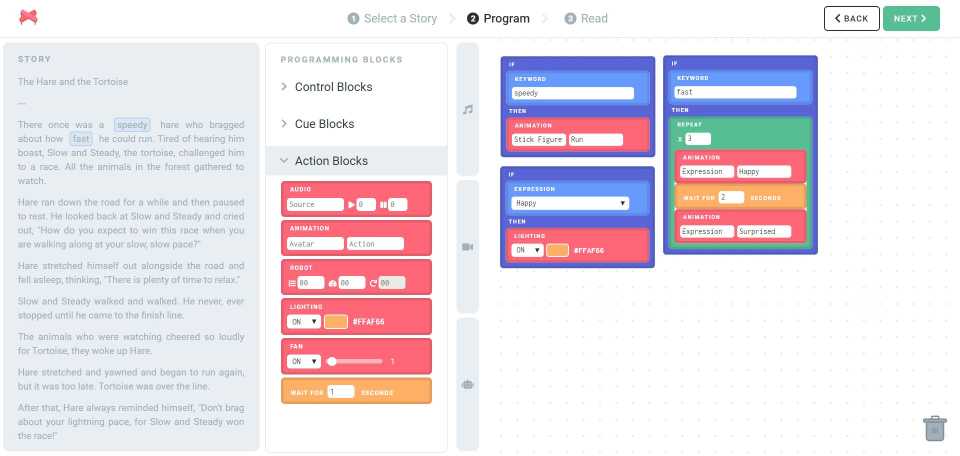

2. Programming using a block-based environment

Block based programming screen

This step lets users be creative by connecting the elements of multimedia storytelling with their chosen story. To lower the barrier to building such complex multimedia programs while still offering programming-like freedom, we took inspiration from block-based visual programming. Tools like MIT's Scratch, Google's Blockly, and other block-based authoring tools are often used to help novice programmers and children use programming capabilities through an interactive, visual construct.

We designed the user experience to guide the user correctly, preventing possible errors instead of correcting them after the fact. For example, the user could not drag an action block into the if-condition slot or use blocks with blank values. In addition, a compiler-like check filtered out logical errors before the user could proceed to the reading environment.
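The two safeguards described above (refusing invalid drops, plus a compiler-like pass before reading) could be sketched roughly as follows. This is a minimal illustration, not Aesop's actual code: the block shapes, the `kind` names, and the helpers `canDrop` and `checkProgram` are hypothetical, and "keyword must appear in the story" is only an assumed example of a logical error the check might catch.

```javascript
// Hypothetical block shapes (assumptions for illustration, not Aesop's actual schema):
//   cue block:    { kind: "keyword" | "expression", value: string }
//   action block: { kind: "light" | "audio" | "animation" | "fan", value: string }
//   rule:         { cue: <cue block>, actions: [<action block>, ...] }
const CUE_KINDS = new Set(["keyword", "expression"]);
const ACTION_KINDS = new Set(["light", "audio", "animation", "fan"]);

// Called on drag-and-drop: only cue blocks fit the if-condition slot,
// and only action blocks fit the actions slot, so invalid drops are refused up front.
function canDrop(block, slot) {
  if (slot === "condition") return CUE_KINDS.has(block.kind);
  if (slot === "actions") return ACTION_KINDS.has(block.kind);
  return false;
}

// Escape regex metacharacters so a keyword is matched literally.
function escapeRegExp(s) {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// True if the phrase occurs in the text as whole words (case-insensitive).
function containsPhrase(text, phrase) {
  return new RegExp(`\\b${escapeRegExp(phrase.trim())}\\b`, "i").test(text);
}

// Compiler-like pass run before entering reading mode; returns a list of error messages.
function checkProgram(rules, storyText) {
  const errors = [];
  rules.forEach((rule, i) => {
    const label = `Rule ${i + 1}`;
    if (!rule.cue || !rule.cue.value.trim()) {
      errors.push(`${label}: cue is blank`);
    } else if (rule.cue.kind === "keyword" && !containsPhrase(storyText, rule.cue.value)) {
      errors.push(`${label}: keyword "${rule.cue.value}" never appears in the story`);
    }
    if (rule.actions.length === 0) {
      errors.push(`${label}: no actions attached`);
    }
    rule.actions.forEach((action, j) => {
      if (!action.value.trim()) errors.push(`${label}, action ${j + 1}: value is blank`);
    });
  });
  return errors;
}

const story = "It was a sunny day in the forest.";
const rules = [
  { cue: { kind: "keyword", value: "sunny day" }, actions: [{ kind: "light", value: "yellow" }] },
  { cue: { kind: "keyword", value: "thunder" }, actions: [] },
];
console.log(canDrop({ kind: "light", value: "yellow" }, "condition")); // false
console.log(checkProgram(rules, story).length); // 2
```

Keeping `canDrop` separate from `checkProgram` mirrors the split in the text: the first prevents structural mistakes while editing, and the second catches problems that only make sense once the whole program and story are known.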

We also provided subtle feedback in response to the user's actions. For example, adding a keyword cue to the programming space highlights that word in the reference story, and newly generated blocks glow briefly to draw attention and indicate success.
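The keyword highlighting in the reference story could work along the lines of this sketch, which rebuilds the story HTML with each cue wrapped in a marker element. It is an illustration under assumptions: the `<mark class="cue">` element and class name, and the function `highlightCues`, are hypothetical rather than taken from Aesop.

```javascript
// Escape HTML-special characters so story text can be inserted with innerHTML safely.
function escapeHtml(s) {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Escape regex metacharacters so each keyword is matched literally.
function escapeRegExp(s) {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Returns story HTML with every whole-word, case-insensitive occurrence of a keyword cue
// wrapped in <mark class="cue">. Longer phrases come first in the alternation, so
// "sunny day" is preferred over "sunny" when both could match at the same position.
function highlightCues(storyText, keywords) {
  const html = escapeHtml(storyText);
  const phrases = keywords.map((k) => k.trim()).filter(Boolean);
  if (phrases.length === 0) return html;
  const alternation = phrases
    .sort((a, b) => b.length - a.length)
    .map((k) => escapeRegExp(escapeHtml(k)))
    .join("|");
  const pattern = new RegExp(`\\b(${alternation})\\b`, "gi");
  return html.replace(pattern, '<mark class="cue">$1</mark>');
}

console.log(highlightCues("It was a sunny day. Sunny skies.", ["sunny", "sunny day"]));
// It was a <mark class="cue">sunny day</mark>. <mark class="cue">Sunny</mark> skies.
```

In the page, the result would be assigned to the story container, for example with jQuery's `.html()` on a hypothetical `#story` element, and re-run whenever a keyword block is added or removed.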

All the logic for this interface is written from scratch (not MIT Scratch) in vanilla JavaScript and jQuery (a bit old school, eh?). The program created with blocks is translated into arrays of JavaScript objects representing cues and actions, linked by index, and uploaded to Firebase for real-time access across multiple devices.
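A rough sketch of that translation step is shown below: the block program is flattened into two parallel arrays, where `cues[i]` triggers the list in `actions[i]`. The rule shape, the `compileProgram` name, and the `storyId` field are assumptions for illustration; the Firebase upload itself is left out.

```javascript
// Hypothetical rule shape (assumed, not Aesop's actual schema):
//   { cue: { kind: "keyword" | "expression", value: string },
//     actions: [{ kind: string, value: string }, ...] }
// Flattens the block program into two parallel arrays linked by index:
// cues[i] is the trigger and actions[i] is the list of actions it fires.
function compileProgram(storyId, rules) {
  const cues = [];
  const actions = [];
  for (const rule of rules) {
    // Normalize cue values (trim + lowercase) so matching at reading time is case-insensitive.
    cues.push({ kind: rule.cue.kind, value: rule.cue.value.trim().toLowerCase() });
    // Copy each action so later edits in the block editor do not mutate the compiled program.
    actions.push(rule.actions.map((a) => ({ kind: a.kind, value: a.value })));
  }
  // A plain, JSON-serializable object that could be stored under a database path
  // keyed by storyId and read back by the other devices.
  return { storyId, cues, actions };
}

const program = compileProgram("demo-story", [
  {
    cue: { kind: "keyword", value: "Sunny day" },
    actions: [{ kind: "light", value: "yellow" }, { kind: "audio", value: "birds" }],
  },
  {
    cue: { kind: "expression", value: "sad" },
    actions: [{ kind: "animation", value: "rain" }, { kind: "fan", value: "on" }],
  },
]);
console.log(JSON.stringify(program.cues[0])); // {"kind":"keyword","value":"sunny day"}
console.log(program.actions[1].length); // 2
```

If the Firebase Realtime Database was the backing store, an object like this could be written with something like `firebase.database().ref("programs/" + program.storyId).set(program)` in the namespaced Web SDK; the path layout there is purely an assumption.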

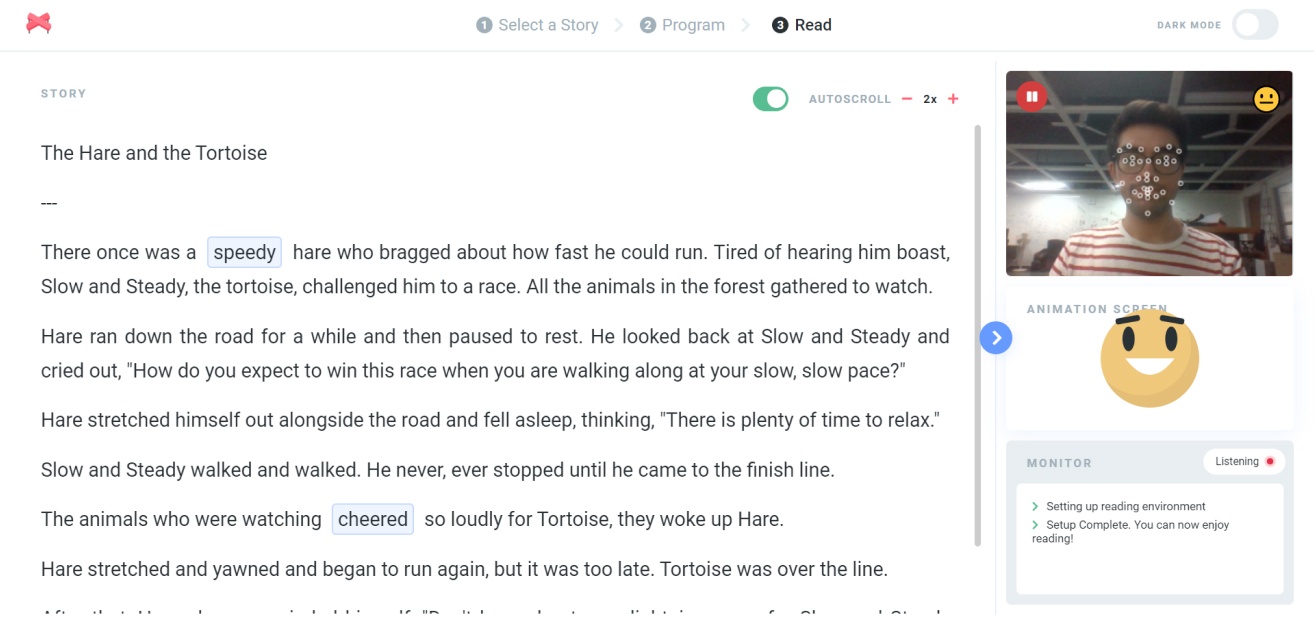

3. Reading

Reading stage screen

Finally, the user can enjoy the digital storytelling experience they programmed using Aesop. Since a program can combine multiple modalities, the reading mode is designed to be dynamic. First, the user is prompted to check the connected devices required by the modalities used in the program. Next, the user sees the reading screen, with the story set in a large, readable font. Keywords in the story are highlighted according to the program and light up when spoken, indicating that the cue was detected. Other dynamic feedback windows, such as the animation preview and the expression detector showing the live feed from the front camera, sit in a collapsible panel on the right. We designed the reading screen so that the user can comfortably read the story on a laptop or tablet, or even from a printed storybook with Aesop running on the side. A dark mode supports bedtime stories read by a parent to a child, and the user can also enable autoscroll and control its speed while reading.

We use the Web Speech API to detect speech and Affectiva's canvas-based API for expression detection. Their real-time results are matched against the programmed cues loaded from Firebase, and the corresponding actions are executed whenever a cue matches.
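The matching step could look roughly like the sketch below, which takes a compiled program of index-linked cues and actions and checks each incoming detection result against it. This is a hedged illustration, not Aesop's implementation: the event shapes, the 0-100 score scale, `EXPRESSION_THRESHOLD`, and `createCueMatcher` are all assumptions, and it does not model Affectiva's actual result objects.

```javascript
// Assumed shapes (hypothetical, for illustration):
//   program:          { cues: [{ kind, value }], actions: [[{ kind, value }], ...] }, cues[i] -> actions[i]
//   speech event:     { type: "speech", transcript: string }
//   expression event: { type: "expression", scores: { [emotion]: number } } on a 0-100 scale
const EXPRESSION_THRESHOLD = 50; // hypothetical confidence cutoff for an expression cue

// Escape regex metacharacters so a keyword is matched literally.
function escapeRegExp(s) {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Returns an event handler that checks each detection result against every programmed
// cue and runs the linked actions when a cue matches.
// Design choice (assumption): each cue fires at most once per reading, so repeated
// partial transcripts of the same sentence do not retrigger its actions.
function createCueMatcher(program, runAction) {
  const fired = new Set();
  return function handleEvent(event) {
    program.cues.forEach((cue, i) => {
      if (fired.has(i)) return;
      let matched = false;
      if (event.type === "speech" && cue.kind === "keyword") {
        matched = new RegExp(`\\b${escapeRegExp(cue.value)}\\b`, "i").test(event.transcript);
      } else if (event.type === "expression" && cue.kind === "expression") {
        matched = (event.scores[cue.value] ?? 0) >= EXPRESSION_THRESHOLD;
      }
      if (matched) {
        fired.add(i);
        program.actions[i].forEach(runAction);
      }
    });
  };
}

const log = [];
const handle = createCueMatcher(
  {
    cues: [
      { kind: "keyword", value: "sunny day" },
      { kind: "expression", value: "sadness" },
    ],
    actions: [
      [{ kind: "light", value: "yellow" }],
      [{ kind: "animation", value: "rain" }, { kind: "fan", value: "on" }],
    ],
  },
  (action) => log.push(`${action.kind}:${action.value}`)
);
handle({ type: "speech", transcript: "it was a sunny day" });
handle({ type: "expression", scores: { sadness: 72, joy: 3 } });
handle({ type: "speech", transcript: "another sunny day" }); // cue 0 already fired: ignored
console.log(log.join(", ")); // light:yellow, animation:rain, fan:on
```

In a browser, speech results could be fed in from a `SpeechRecognition` (or `webkitSpeechRecognition`) instance, for example `recognition.onresult = (e) => handle({ type: "speech", transcript: e.results[e.results.length - 1][0].transcript })`; whether cues should fire once or on every occurrence is a product decision the sketch simply assumes.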

Evaluation

User Studies

To validate our work, we conducted user studies with 14 adults aged 19 to 45. Although most users were able to complete all the tasks and reported that the tool was fairly easy to use, we observed the following limitations:

Limitations

- Speech detection is sensitive to background noise and requires quiet surroundings to work reliably

- Some homophones used as cues may be detected incorrectly during narration

- A more diverse library of animations, sound effects, and stories is required to reduce user effort

Final Thoughts

With the working prototype in all its glory, one could definitely create an engaging storytelling experience at home. If scaled, this could encourage a community of storytellers sharing their programmed stories on the platform. Additionally, such tools can be used for experiential learning and for teaching children. Multi-sensory engagement through stories can also be used to enhance communication with children who have learning disabilities.

Aesop was presented at UIST 2019 as a poster.