Sign Language Translation

Translating American Sign Language to speech in real time with an ordinary camera.

Tags

Machine Learning

Timeline

Dec 2018

Tools & Stack

Python

OpenCV

Keras, TensorFlow

For my minor technical project during my undergraduate studies, I decided to work on American Sign Language (ASL) translation. I had always wanted to implement this idea because I believed the technology to solve the problem already existed and could make a real difference in some people's daily lives.

Brainstorming

Most existing robust translators require additional hardware: hand-worn devices (gloves, rings) or specialized cameras, such as the depth-sensing cameras used by Leap Motion or the Kinect. However, if we want a solution that can be used anytime and anywhere, we cannot expect people to carry a glove or dedicated hardware for this purpose. We therefore wanted an approach that works with an ordinary mobile phone camera or webcam.

Problem

How can we design a sign language translator that fills the role of a human interpreter working in real time?

An ideal translator should be equivalent to a trained interpreter who watches the signer and interprets in real time. To achieve this, it needs to:

- Identify each hand sign in real time as it is made and held

- Use the sequence of classified signs to form the intended sentence

- Speak the interpreted sentence once the signer has finished

- Filter out noise by understanding context

- Run without any specialized hardware setup

Development

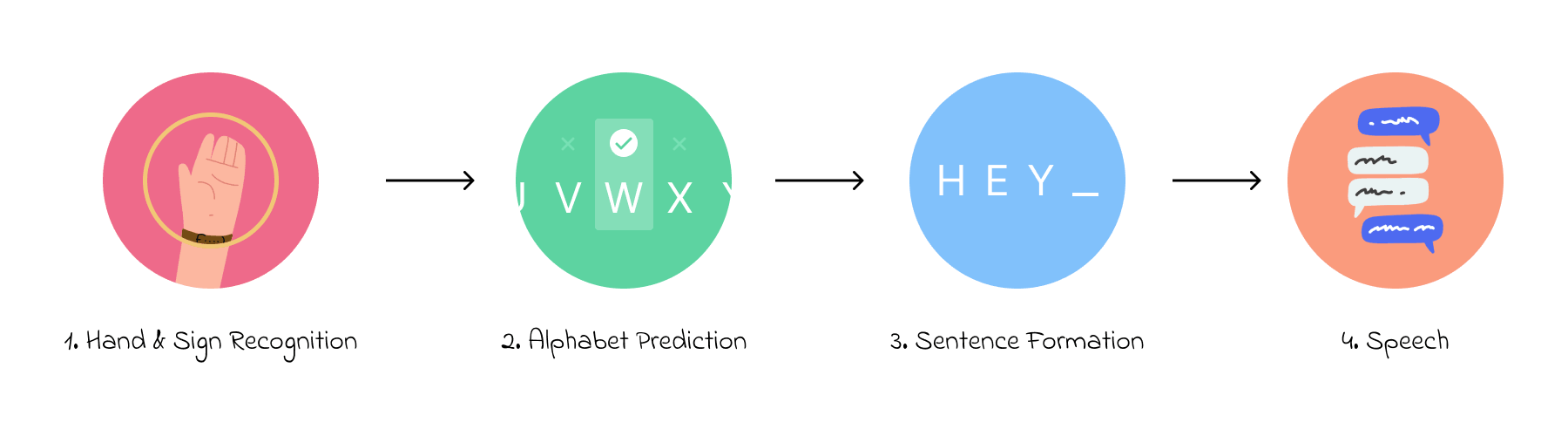

The Implementation

1. Recognising the hand

We spent some time trying to locate the hand in the full-body frame using hand detection (Haar cascade classifiers trained to detect hands), but soon realised that this would sharply reduce the accuracy of sign identification, for two reasons. First, the hand detection was not robust against background clutter, and because the rest of the pipeline depended on it cropping the hand correctly from the frame, there was little room for error. Second, some signs, such as the letter S (a simple fist) and D (a pointing finger), closely resemble gestures people make every day, so the translator could pick up those gestures from the frame even when they were not intended as communication.

2. Predicting the letters

This led us to dedicate a fixed region of the frame to sign classification, placed on the left or right depending on whether the signer is left- or right-handed. Knowing where the hand is, we could then focus on accurately predicting the letter it represents. We trained a CNN model on a dataset we created by asking different people to sign in front of different backgrounds, and saved the model. We iterated on the model's hyperparameters and improved the dataset until the model achieved over 98% accuracy on randomly chosen test signs. We then loaded the saved model to make predictions in real time.
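The fixed-region idea can be sketched in a few lines of Python. This is a minimal sketch, not the project's code: the box size, margin, corner placement and the function name `roi_box` are hypothetical, and it assumes the preview is mirrored (for example with `cv2.flip(frame, 1)`), so a right-handed signer's hand appears on the right of the image.

```python
# Hypothetical values: the write-up does not state the box size or position.
ROI_SIZE = 200    # side length of the square signing region, in pixels
ROI_MARGIN = 20   # gap between the region and the frame edge, in pixels


def roi_box(frame_width, frame_height, left_handed=False,
            size=ROI_SIZE, margin=ROI_MARGIN):
    """Return (x0, y0, x1, y1) of a fixed square region for the signing hand.

    Assumes a mirrored preview: a right-handed signer's hand appears on the
    right of the image, so the box goes in the upper-right corner; for a
    left-handed signer it goes in the upper-left corner.
    """
    if size + 2 * margin > min(frame_width, frame_height):
        raise ValueError("frame too small for the requested region")
    y0 = margin
    x0 = margin if left_handed else frame_width - margin - size
    return x0, y0, x0 + size, y0 + size


if __name__ == "__main__":
    print(roi_box(640, 480))  # → (420, 20, 620, 220)
```

In a capture loop, the box can be drawn as a guide with `cv2.rectangle(frame, (x0, y0), (x1, y1), (0, 255, 0), 2)` and the hand cropped with `frame[y0:y1, x0:x1]` before resizing it to the CNN's input size. Keeping the region fixed removes the dependence on a hand detector, and gestures made outside the box are simply ignored.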

3. Generating a sentence

The next step was to sample these per-frame predictions and append them in order to form a sentence. Sampling had to filter out noise and keep only a sign that stays steady for a short time before the signer moves on. To implement this, we grouped predictions into sets of 15 consecutive frames (approximately one second) and checked whether the prediction was consistent across most of those frames. If it was, that letter was added to the sentence; if the prediction kept changing, nothing was added. Finally, we ran word segmentation on the accumulated letters to insert spaces between words in real time.
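The windowed sampling and the spacing step might look like the following. It is a hedged sketch under stated assumptions: the 15-frame window comes from the text, but the 80% agreement threshold, the non-overlapping windows, the tiny vocabulary and the names `stable_prediction`, `build_letters` and `segment` are hypothetical. The segmenter is a plain dynamic-programming word break that prefers the fewest words; the write-up does not say which word-segmentation method the project used.

```python
from collections import Counter

WINDOW = 15        # frames per window, about one second of video (from the text)
AGREEMENT = 0.8    # hypothetical: fraction of frames that must agree


def stable_prediction(window_labels, agreement=AGREEMENT):
    """Return the majority label if enough frames agree, else None."""
    if not window_labels:
        return None
    label, count = Counter(window_labels).most_common(1)[0]
    return label if count / len(window_labels) >= agreement else None


def build_letters(frame_labels, window=WINDOW, agreement=AGREEMENT):
    """Split per-frame predictions into full, non-overlapping windows and
    keep the label of every window that is stable enough."""
    letters = []
    for start in range(0, len(frame_labels) - window + 1, window):
        label = stable_prediction(frame_labels[start:start + window], agreement)
        if label is not None:
            letters.append(label)
    return "".join(letters)


def segment(text, vocabulary):
    """Split text into vocabulary words, preferring the fewest words.

    Returns None if the text cannot be fully covered by vocabulary words.
    """
    best = [None] * (len(text) + 1)  # best[i]: word list covering text[:i]
    best[0] = []
    for end in range(1, len(text) + 1):
        for start in range(end):
            word = text[start:end]
            if best[start] is not None and word in vocabulary:
                candidate = best[start] + [word]
                if best[end] is None or len(candidate) < len(best[end]):
                    best[end] = candidate
    return None if best[-1] is None else " ".join(best[-1])


if __name__ == "__main__":
    frames = ["H"] * 15 + ["A", "B", "C"] * 5 + ["I"] * 15
    print(build_letters(frames))  # → HI (the noisy middle window is dropped)
    print(segment("howareyou", {"how", "are", "you", "a", "re"}))  # → how are you
```

One limitation of this sketch: a letter held for longer than one window is appended twice, so a long hold and a genuine double letter look the same. A practical segmenter would also score candidate splits by word frequency instead of simply minimising the word count.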

4. Converting to speech

The only challenge in speech conversion was deciding when to speak the generated sentence. Intuitively, once the person stops signing, the translator should speak the identified sentence. We restarted a timer every time a new letter was added to the sentence. Since a sign takes roughly 1 to 2 seconds to be added, the translator spoke the sentence once five seconds passed with no new letter.
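The silence rule can be sketched as a small class that is polled once per frame. This is an illustrative sketch: the five-second threshold comes from the text, while the class name `SentenceSpeaker`, its methods and the injectable clock are hypothetical. The `speak` argument is any function that takes a string.

```python
import time

SILENCE_SECONDS = 5.0  # from the text: speak after five seconds with no new letter


class SentenceSpeaker:
    """Accumulate letters and speak once no new letter arrives for a while.

    `speak` is any callable taking a string; `clock` returns seconds and is
    injectable so the timing can be tested without actually waiting.
    """

    def __init__(self, speak, silence=SILENCE_SECONDS, clock=time.monotonic):
        self.speak = speak
        self.silence = silence
        self.clock = clock
        self.sentence = ""
        self.last_added = None  # time of the most recent letter, or None

    def add_letter(self, letter):
        """Append a letter and restart the silence timer."""
        self.sentence += letter
        self.last_added = self.clock()

    def poll(self):
        """Call once per frame; speak and clear the sentence after silence.

        Returns True if the sentence was spoken on this call.
        """
        if self.last_added is None or not self.sentence:
            return False
        if self.clock() - self.last_added >= self.silence:
            self.speak(self.sentence)
            self.sentence = ""
            self.last_added = None
            return True
        return False
```

In a live loop, `add_letter` would be called whenever the sampler accepts a letter and `poll` on every frame; `speak` could wrap an offline text-to-speech engine such as pyttsx3 (`engine.say(text)` followed by `engine.runAndWait()`). Using `time.monotonic` rather than wall-clock time keeps the timer unaffected by system clock changes.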

Demo

The Working Prototype

Here, you can view the working prototype built with Python, OpenCV, and TensorFlow (Keras).